Four years ago, I wrote about Renyi's axiomisation of probability that, in contrast to that of Kolmogorov, takes conditional probability as the fundamental concept. It is timely to revisit the topic given my last post on Kolmogorov's axioms. In addition, Suarez (whose latest book was also discussed in my last post) appears to endorse the Renyi axioms over those of Kolmogorov, although only in a footnote. Stephen Mumford, Rani Lill Anjum and Johan Arnt Myrstad, in Chapter 6 of the book What Tends to Be, also follow their analysis of conditional probability in the Kolmogorov axiomisation by taking the view that conditional probabilities should not be reducible to absolute probabilities.

In his Foundations of Probability (1969) Renyi provided an alternative axiomisation to that of Kolmogorov, one that takes conditional probability as the fundamental notion. Otherwise he stays as close as possible to Kolmogorov. As with the Kolmogorov axioms, I shall replace reference to events with possibilities.

Renyi's conditional probability space \((\Omega, \mathfrak{F}, \mathfrak{G}, P(F | G))\) is defined as follows.

The set \(\Omega\) is the space of elementary possibilities, \(\mathfrak{F}\) is a \(\sigma\)-field of subsets of \(\Omega\), and \(\mathfrak{G}\) is a subset of \(\mathfrak{F}\) (called the set of admissible conditions) with the following properties:

(a) \( G_1, G_2 \in \mathfrak{G} \Rightarrow G_1 \cup G_2 \in \mathfrak{G}\),

(b) \(\exists \{G_n\}\), a sequence in \(\mathfrak{G}\), such that \(\cup_{n=1}^{\infty} G_n = \Omega,\)

(c) \(\emptyset \notin \mathfrak{G}\).

\(P\) is the conditional probability function satisfying the following four axioms.

R0. \( P : \mathfrak{F} \times \mathfrak{G} \rightarrow [0, 1]\),

R1. \( (\forall G \in \mathfrak{G} ) , P(G | G) = 1.\)

R2. \((\forall G \in \mathfrak{G}), P(\centerdot | G)\) is a countably additive measure on \(\mathfrak{F}\).

R3. For all \(G_1, G_2 \in \mathfrak{G}\) with \(G_2 \subseteq G_1\), \(P(G_2 | G_1) > 0\) and

$$(\forall F \in \mathfrak{F}) P(F|G_2 ) = { \frac{P(F \cap G_2 | G_1)}{P(G_2 | G_1)}}.$$
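The definition can be made concrete with a small finite model. The following is a minimal sketch, not Renyi's own construction: it assumes a three-element \(\Omega\) with hypothetical positive weights, takes \(\mathfrak{F}\) to be the power set and \(\mathfrak{G}\) to be all non-empty subsets, and defines \(P(F|G)\) as a ratio of weights. The names `WEIGHT`, `P` and `check_axioms` are illustrative only. On a finite space countable additivity reduces to additivity over pairs of disjoint sets, which is what the R2 check tests.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical toy space: three elementary possibilities with positive weights.
OMEGA = frozenset({"a", "b", "c"})
WEIGHT = {"a": Fraction(1), "b": Fraction(2), "c": Fraction(3)}


def subsets(s):
    """All subsets of s as frozensets (the power set)."""
    items = sorted(s)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


F_FIELD = subsets(OMEGA)             # the sigma-field: here the full power set
G_COND = {g for g in F_FIELD if g}   # admissible conditions: all non-empty sets


def weight(s):
    """Total weight of the elementary possibilities in s."""
    return sum((WEIGHT[x] for x in s), Fraction(0))


def P(f, g):
    """Conditional probability P(f | g), defined only for admissible g."""
    if g not in G_COND:
        raise ValueError("g is not an admissible condition")
    return weight(f & g) / weight(g)


def check_axioms():
    """Return True if properties (a)-(c) and axioms R0-R3 hold in the toy space."""
    ok = frozenset() not in G_COND                                    # (c)
    ok &= all((g1 | g2) in G_COND for g1 in G_COND for g2 in G_COND)  # (a)
    ok &= frozenset().union(*G_COND) == OMEGA                         # (b)
    for g in G_COND:
        ok &= P(g, g) == 1                                            # R1
        for f1 in F_FIELD:
            ok &= 0 <= P(f1, g) <= 1                                  # R0
            for f2 in F_FIELD:
                if not (f1 & f2):                                     # R2, disjoint sets
                    ok &= P(f1 | f2, g) == P(f1, g) + P(f2, g)
    for g1 in G_COND:
        for g2 in G_COND:
            if g2 <= g1:                                              # R3
                ok &= P(g2, g1) > 0
                ok &= all(P(f, g2) == P(f & g2, g1) / P(g2, g1)
                          for f in F_FIELD)
    return ok


print(check_axioms())
```

In this sketch every non-empty set is an admissible condition, so it behaves much like a Kolmogorov space; the interesting cases arise when \(\mathfrak{G}\) is chosen to be smaller than \(\mathfrak{F}\).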

Several problems have been examined by Stephen Mumford, Rani Lill Anjum and Johan Arnt Myrstad in What Tends to Be, Chapter 6, as part of a critique of the applicability of Kolmogorov's definition of conditional probability to the ontology of dispositions that tend to cause or hinder events. These have been analysed by them using Kolmogorov's absolute probabilities, but without a careful construction of the probability space appropriate for the application. These same examples will be analysed here using both Kolmogorov's and Renyi's formulation.

The first example is taken to indicate a problem with absolute probability. (Absolute probability will be denoted by \(\mu\) below to avoid confusion with Renyi's function \(P\); the \(\sigma\)-field \(\mathfrak{F}\) is the same for both.)

P1. For \(A, B \in \mathfrak{F}\), if \(\mu(A) = 1\) then \(\mu(A | B) =1\), where \(\mu\) is Kolmogorov's absolute probability.

Strictly, this holds only for sets \(B\) with \(\mu(B) \gt 0\). We can calculate this result from Kolmogorov's conditional probability postulate as follows: since \(\mu(A) = 1\) gives \(\mu(A^C) = 0\) and hence \(\mu(B \cap A^C) = 0\), we have

$$\mu(A \cap B) = \mu(B),$$

$$\mu(A|B) = \mu(A \cap B)/\mu(B) = \mu(B)/\mu(B)=1.$$

This is not problematic within the mathematics but Mumford et al consider it to be if \(\mu(A|B)\) is to be understood as a degree of implication. They claim that there must exist a condition under which the probability of \(A\) decreases. They justify this through an example:

Say we strike a match and it lights. The match is lit and thus the (unconditional) probability of it being lit is one. Still, this does not entail that the match lit given that there was a strong wind. A number of conditions could counteract the match lighting, thus lowering the probability of this outcome. The match might be struck in water, the flammable tip could have been knocked off, the match could have been sucked into a black hole, and so on.

Let us analyse this more closely. Let \(A =\) "the match lights". Then, "The match is lit and thus the (unconditional) probability of it being lit is one." is equivalent to \(\mu(A|A) = 1\). This is not controversial. They go on to bring other considerations into play and, intuitively, it seems evident that whether a match is lit or not will depend on the existing situation: for example, on whether it is wet or dry, windy or not, and whether the match is struck effectively. But this enlarges the space of elementary possibilities. In this enlarged probability space, the set \(A\) labelled "the match lights" is

$$ A=\{(\textsf{"the match is lit", "it is windy", "it is dry", "match is struck"})\} \cup \\ \{(\textsf{"the match is lit", "it is not windy", "it is dry", "match is struck"})\} \cup \\ \{(\textsf{"the match is lit", "it is windy", "it is not dry", "match is struck"})\} ......$$

where \(......\) indicates all the other subsets (elementary possibilities) that make up \(A\).

Each elementary possibility is now a 4-tuple and \(A\) is the union of all sets consisting of a single 4-tuple in which the first item is "the match is lit". Similarly, a set can be constructed to represent \(B=\) "match struck". The probability function over the probability space is constructed from the probabilities assigned to each elementary possibility. An assignment can be made such that

$$ \mu(A|B) =1 \textsf{ or } \mu(A| B^C) =0 $$

where \(B^C\) ("match not struck") is the complement of \(B\) . It would not be a physically or causally feasible allocation of probabilities to have \( \mu(A| B^C) =1 \) whereas \( \mu(A| B^C) =0 \) is. Indeed, a physically valid allocation of probabilities should give \(\mu(A \cap B^C) = \mu(\emptyset) =0\). All Kolmogorov probability assignments of elementary possibilities with "the match is lit" and "match not struck" in the 4-tuples of elementary possibilities should be zero. The Kolmogorov ratio formula for the conditional probability would apply in the case when all the conditions are accommodated in the set of elementary possibilities. Therefore, P1 is not a problem for the Kolmogorov axioms if the probability space is appropriately modelled.
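The enlarged probability space can be sketched numerically. The following is an illustrative model only: the 4-tuples follow the ordering (lit, windy, dry, struck) used above, while the uniform weighting of the conditions and the lighting chances in `LIGHT_CHANCE` are invented for the example and are not taken from Mumford et al. The model assigns zero probability to every 4-tuple combining "the match is lit" with "match not struck".

```python
from fractions import Fraction
from itertools import product

# Hypothetical chance that a struck match lights, keyed by (windy, dry).
LIGHT_CHANCE = {
    (False, True): Fraction(9, 10),
    (True, True): Fraction(1, 2),
    (False, False): Fraction(1, 10),
    (True, False): Fraction(0),
}


def build_mu():
    """Assign a probability to every 4-tuple (lit, windy, dry, struck)."""
    mu = {}
    for lit, windy, dry, struck in product([True, False], repeat=4):
        # An unstruck match never lights: those tuples get probability zero.
        p_lit = LIGHT_CHANCE[(windy, dry)] if struck else Fraction(0)
        # Assumption: each (windy, dry, struck) combination has probability 1/8.
        mu[(lit, windy, dry, struck)] = Fraction(1, 8) * (p_lit if lit else 1 - p_lit)
    return mu


MU = build_mu()


def event(pred):
    """The set of elementary possibilities satisfying pred."""
    return frozenset(w for w in MU if pred(*w))


def mu(s):
    """Absolute (Kolmogorov) probability of the set s."""
    return sum((MU[w] for w in s), Fraction(0))


def cond(a, b):
    """Kolmogorov ratio formula; only defined when mu(b) > 0."""
    if mu(b) == 0:
        raise ZeroDivisionError("conditioning set has probability zero")
    return mu(a & b) / mu(b)


A = event(lambda lit, windy, dry, struck: lit)         # "the match lights"
B = event(lambda lit, windy, dry, struck: struck)      # "match struck"
B_C = frozenset(MU) - B                                # "match not struck"
WINDY = event(lambda lit, windy, dry, struck: windy)
CALM = frozenset(MU) - WINDY

print(mu(A & B_C))         # 0
print(cond(A, B_C))        # 0
print(cond(A, B))          # 3/8
print(cond(A, B & WINDY))  # 1/4
print(cond(A, B & CALM))   # 1/2
```

With these assumed numbers, conditioning on wind lowers the probability of the match lighting, which is the behaviour Mumford et al ask for, and it is obtained from the ratio formula alone.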

Appropriate modelling is just as relevant when using the Renyi axioms. In addition, as we are working in the context of conditions influencing outcomes, we will not allow outcomes that cannot themselves act as influences to be in the set of admissible conditions \(\mathfrak{G}\). This has no effect on the analysis of P1 but, as will be discussed below, is important for modelling causal conditions.

A further problematic consequence of Kolmogorov's conditional probability, according to Mumford et al, arises when \(A\) and \(B\) are probabilistically independent:

P2. \(\mu (A\cap B)=\mu(A )\mu(B)\) implies \(\mu(A|B)=\mu(A).\)

This is indeed a consequence of Kolmogorov's definition. Renyi's formulation does not allow this analysis to be carried out unless \(\mathfrak{G} = \mathfrak{F}\). Mumford et al illustrate their concern through an example:

The dispositional properties of solubility and sweetness are not generally thought to be probabilistically dependent.

Whatever is generally thought, the mathematical analysis will depend on the probability model. If two properties are probabilistically independent, then that should be captured in the model. However, the objections of Mumford et al are combined with a criticism of Adams' Thesis:

Assert (if B then A) = \(P\)(if B then A) =\(P\)(A given B) = \(P(A|B)\)

where \(P(A|B)\) is given by the Kolmogorov ratio formula. However, it should be remembered that the Kolmogorov ratio formula may simply be showing correlation and not that B causes A or that B implies A to any degree. I do not want to get into defending or challenging this thesis here, but within the Renyi axiomisation the Kolmogorov conditional probability formula only holds under special conditions (see R3). Independence, in Renyi's scheme, is only defined with reference to some conditioning set, \(C\) say, in which case probabilistic independence is described by the condition

$$ P(A \cap B |C) = P(A|C)P(B|C)$$

and as a consequence, it is only if \(B \subseteq C\) that

$$P(A|B) =\frac{P(A \cap B | C)}{P(B | C)} = P(A|C)$$

This means that if a set \(D\) in \(\mathfrak{G}\) that is not a subset of \(C\) is used to condition \(A \cap B\) then, in general,

$$P(A \cap B |D) \neq P(A|D)P(B|D)$$

even if

$$P(A \cap B |C) = P(A|C)P(B|C).$$

This shows that in the Renyi axiomisation statistical independence is not an absolute property of two sets.
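A small numerical illustration of this relativity is given below. It is a sketch under stated assumptions: equal weights on a finite set of integers, every non-empty set taken as an admissible condition, and the particular sets `A`, `B`, `C` and `D` chosen by hand for the example.

```python
from fractions import Fraction


def P(f, g):
    """P(f | g) under equal weights on a finite space; g must be non-empty."""
    if not g:
        raise ValueError("the empty set is not an admissible condition")
    return Fraction(len(f & g), len(g))


def independent_given(a, b, cond):
    """True if a and b are probabilistically independent relative to cond."""
    return P(a & b, cond) == P(a, cond) * P(b, cond)


A = frozenset({1, 2})
B = frozenset({1, 3})
C = frozenset({1, 2, 3, 4})   # B is a subset of C
D = frozenset({1, 2, 5})      # D is not a subset of C

print(independent_given(A, B, C))   # True
print(independent_given(A, B, D))   # False
print(P(A, B) == P(A, C))           # True, since B is a subset of C
```

Relative to \(C\) the two sets are independent and \(P(A|B) = P(A|C) = 1/2\); relative to \(D\) the product rule fails, because \(P(A \cap B|D) = 1/3\) while \(P(A|D)P(B|D) = 2/9\).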

The third objection is that, regardless of the probabilistic relation between \(A\) and \(B\), the Kolmogorov conditional probability definition implies that whenever the probability of \(A\) and \(B\) together is high, \(\mu(A|B)\) is high and so is \(\mu(B|A)\):

P3. \((\mu(A \cap B) \sim 1) \Rightarrow ((\mu(A|B) \sim 1) \land (\mu(B|A) \sim 1)).\)

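If \(\mu(A \cap B) \sim 1\) then \(A\) and \(B\) coincide except on a set of small measure. Since \(A \cap B\) is a subset of both, \(\mu(A) \sim 1\) and \(\mu(B) \sim 1\). Because \(\mu(A|B) = \mu(A \cap B)/\mu(B) \geq \mu(A \cap B)\), and likewise for \(\mu(B|A)\), the statement in P3 follows. Mumford et al object: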

The probability of the conjunction of ‘the sun will rise tomorrow’ and ‘snow is white’ is high. But this doesn’t necessarily imply that the sun rising tomorrow is conditional upon snow being white, or vice versa.

That may be the case, but the correlation between situations where both are the case is high. Once again, the problem is the identification of conditional probability with a degree of implication in Adams' Thesis. But it is well known that conditional probability may simply capture correlation. If we want to separate conditioning sets from other sets that are consequences in the \(\sigma\)-algebra generated by all elementary possibilities, then Renyi's axioms allow this.

The Renyi equivalent of P3 is

$$ P(A \cap B|C) \sim 1 \Rightarrow (P(A|B) \sim 1, B \subseteq C) \land (P(B|A) \sim 1, A \subseteq C)$$

It does hold when both \(A\) and \(B\) are subsets of \(C\), but that is then a reasonable conclusion for the case of both \(A\) and \(B\) included in \(C\). However, if one of the sets is not a subset of \(C\) then it will not hold in general.
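The failure when one set lies outside \(C\) can be seen in a toy model. This is a sketch with invented numbers: the weights in `WEIGHT` and the sets `A`, `B` and `C` are hypothetical, and every set of positive weight is treated as an admissible condition.

```python
from fractions import Fraction

# Hypothetical weights on three elementary possibilities.
WEIGHT = {1: Fraction(1), 2: Fraction(50), 3: Fraction(49)}


def P(f, g):
    """P(f | g) as a ratio of weights; g must have positive weight."""
    total = sum((WEIGHT[x] for x in g), Fraction(0))
    if total == 0:
        raise ValueError("g is not an admissible condition")
    return sum((WEIGHT[x] for x in f & g), Fraction(0)) / total


A = frozenset({1})          # A is a subset of C
B = frozenset({1, 2, 3})    # B is not a subset of C
C = frozenset({1})

print(P(A & B, C))   # 1
print(P(B, A))       # 1
print(P(A, B))       # 1/100
```

Here \(P(A \cap B|C) = 1\) and \(P(B|A) = 1\), yet \(P(A|B) = 1/100\), because \(B\) extends well beyond the conditioning set \(C\).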

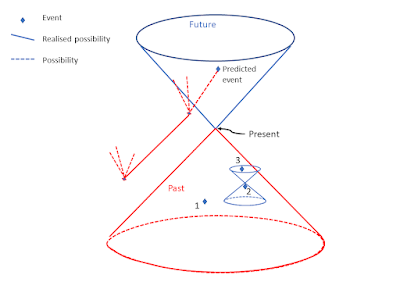

When \(\mathfrak{G}\) is a smaller set than \(\mathfrak{F}\) it becomes useful for causal conditioning. We can exclude sets from \(\mathfrak{G}\) that are outcomes and include sets that are causes. If we are interested in causes of snow being white, we will condition on facts of crystallography and local conditions that may turn snow yellow, as pointed out by Frank Zappa.

For the earlier example, the set \(A\), "the match lights", would not be included in \(\mathfrak{G}\). So for \(C \in \mathfrak{G}\), \(P(A|C)\) is defined but \(P(C|A)\) is not.
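The asymmetry can be sketched in code by restricting the admissible conditions. The following is a minimal illustration under assumptions of my own choosing: elementary possibilities are reduced to (lit, struck) pairs, the weights are invented, and \(\mathfrak{G}\) contains only "match struck", "match not struck" and \(\Omega\), which is closed under union, covers \(\Omega\) and excludes the empty set.

```python
from fractions import Fraction

# Elementary possibilities are (lit, struck) pairs; the weights are hypothetical.
WEIGHT = {
    (True, True): Fraction(3, 8),
    (False, True): Fraction(1, 8),
    (True, False): Fraction(0),     # a lit but unstruck match gets weight zero
    (False, False): Fraction(1, 2),
}
OMEGA = frozenset(WEIGHT)
STRUCK = frozenset(w for w in OMEGA if w[1])
NOT_STRUCK = OMEGA - STRUCK
LIT = frozenset(w for w in OMEGA if w[0])   # the outcome A, "the match lights"

# Only causal conditions are admissible; the outcome LIT is deliberately left out.
ADMISSIBLE = {STRUCK, NOT_STRUCK, OMEGA}


def P(f, g):
    """P(f | g), defined only when g is an admissible condition."""
    if g not in ADMISSIBLE:
        raise ValueError("condition is not in the set of admissible conditions")
    total = sum((WEIGHT[w] for w in g), Fraction(0))
    return sum((WEIGHT[w] for w in f & g), Fraction(0)) / total


print(P(LIT, STRUCK))       # 3/4
print(P(LIT, NOT_STRUCK))   # 0
try:
    P(STRUCK, LIT)
except ValueError as err:
    print("undefined:", err)
```

The probability of the outcome given each causal condition is available, while the reverse conditional is simply not part of the model.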

The Kolmogorov axioms are good for modelling situations where measurable sets represent events of the same status. If there are reasons to believe that some sets have the status of causal conditions for other sets then they should be modelled with Renyi's axiomisation (or some similar axiomisation) as subsets of the set of admissible conditions.

The next question is whether adopting the Renyi scheme, and modelling fully with it, allows a counter to objections such as those of Humphreys (Humphreys, P. (1985) ‘Why Propensities Cannot Be Probabilities’, Philosophical Review, 94: 557–70.) to using conditional probabilities to represent dispositional probabilities.